In my previous post I hinted at a new idea, but first let me explain what the new DNGMonochrome will offer. And yes, I know I'm going slow, promises promises, but this whole transformation was way more work than I had anticipated. I assure you it's not vaporware.

What's new

New is the user interface. Away with those small previews: after conversion you now get the full image, which you can zoom in on up to 800%, drag around, etc. The functionality is mostly modeled on Lightroom.

Away with the clutter: no more noise reduction or deblurring (DNGDeblur will get a similar transformation, but you'll have to use the separate program).

Away also with the RGB filters. It was a nice experiment, but it came too close to true color interpolation and depended on white balancing, which I couldn't show, since there's no full color photo around. Still, I'm not totally convinced there wasn't an upside to it, so I might bring it back in a later version, hopefully in a somewhat more intuitive form.

An improvement: you no longer have to select RAW filters upfront (you still can, but it's not necessary). If you run the conversion in three plane mode, you can apply the filters afterwards and save the photo once it looks okay to you.
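Assuming a three plane conversion keeps an interpolated red, green and blue value for every pixel, a filter can be applied afterwards without interpolating again. A minimal sketch, assuming a RAW filter works like a colored filter on black and white film, i.e. a weighted mix of the three planes; `apply_filter` and its weights are hypothetical, not DNGMonochrome's actual code.

```python
def apply_filter(r, g, b, weights):
    """Mix three interpolated planes (lists of rows) into one monochrome plane.

    weights: hypothetical (red, green, blue) filter strengths. They are
    normalised to sum to 1, so a flat grey area keeps its brightness.
    """
    wr, wg, wb = weights
    total = wr + wg + wb
    if total == 0:
        raise ValueError("at least one filter weight must be non-zero")
    wr, wg, wb = wr / total, wg / total, wb / total
    return [
        [wr * rv + wg * gv + wb * bv for rv, gv, bv in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(r, g, b)
    ]


# Same planes, two different "filters", no re-interpolation needed.
r = [[100, 200]]
g = [[50, 50]]
b = [[0, 100]]
print(apply_filter(r, g, b, (1, 0, 0)))  # red filter: [[100.0, 200.0]]
print(apply_filter(r, g, b, (1, 1, 0)))  # red + green: [[75.0, 125.0]]
```

Trying another filter only repeats this cheap weighted sum, which is what makes filtering in hindsight practical.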

Another improvement: added a histogram for the RAW result.

And a nasty one to get right, but I think I've finally got it: portrait photos are rotated properly.
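One reason rotation can be fiddly: rotating the raw Bayer mosaic by 90 degrees changes its CFA pattern (an RGGB layout turns into GRBG), so it's usually simpler to rotate after interpolation. A minimal sketch, assuming the image is a list of rows and the rotation comes from the standard TIFF/EXIF Orientation tag (1 = normal, 3 = 180°, 6 = 90° clockwise, 8 = 90° counter-clockwise); the mirrored values 2, 4, 5 and 7 are left out, and the function names are hypothetical.

```python
def rotate_cw(img):
    """Rotate a list-of-rows image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def rotate_ccw(img):
    """Rotate a list-of-rows image 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def apply_orientation(img, orientation):
    """Turn a decoded image upright according to its Orientation tag value."""
    if orientation == 1:
        return [list(row) for row in img]
    if orientation == 3:
        return [list(row[::-1]) for row in img[::-1]]
    if orientation == 6:
        return rotate_cw(img)
    if orientation == 8:
        return rotate_ccw(img)
    raise ValueError(f"unsupported orientation: {orientation}")


landscape = [[1, 2, 3],
             [4, 5, 6]]
print(apply_orientation(landscape, 6))  # [[4, 1], [5, 2], [6, 3]]
```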

What stays is the option to produce a monochrome photo without interpolating at all, by cutting away the red, the blue and one of the greens. It's a very nice way to get a small 'true' monochrome photo without any of the interpolation artifacts (if you can live with 25% of the original pixel count, i.e. half the width and half the height). It's also a handy option for experimenting with the RAW filters, because it's all a lot faster. Then, when you have the right settings, go for the fully interpolated version.
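A minimal sketch of the non-interpolated route, assuming an RGGB mosaic stored as a list of rows: keep one green photosite per 2x2 quad and drop the other three. `green_only` and its `(0, 1)` default offset are assumptions for illustration; a real DNG declares its own layout in the CFAPattern tag.

```python
def green_only(mosaic, green_offset=(0, 1)):
    """Keep one green photosite from every 2x2 Bayer quad, no interpolation.

    green_offset is the (row, col) position of the kept green inside each quad;
    (0, 1) assumes an RGGB layout. A trailing odd row or column is dropped.
    """
    dy, dx = green_offset
    height, width = len(mosaic), len(mosaic[0])
    return [
        [mosaic[y + dy][x + dx] for x in range(0, width - 1, 2)]
        for y in range(0, height - 1, 2)
    ]


# A 4x4 mosaic becomes 2x2: half the width, half the height, 25% of the pixels.
mosaic = [[row * 4 + col for col in range(4)] for row in range(4)]
print(green_only(mosaic))  # [[1, 3], [9, 11]]
```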

New also: more algorithms. Apart from the one already in there, three new ones are added.

And of course more camera support: most of the Leicas (M8 to M10, including the 240, 262 and 262 M-D, plus the CL, SL, S, S2 and T), the Canons (EOS 350D, EOS 500D, EOS 550D, EOS 30D, EOS 40D, EOS 60D, EOS 5D Mark II / III), the Nikons (D200, D800, D800E) and the Sony A7s. For all the non-Leicas one condition remains: you'll have to convert the RAW to DNG first, with Adobe DNG Converter or Lightroom (export as DNG). It should be a full-size RAW; smaller RAW formats are untested. I've also made some improvements in handling the quirkier combinations of tags in a DNG, which should make it easier to add new cameras and better fulfill the promise of DNG as a universal format.

The algorithms

I have to remind you that we're pixel peeping. All the stuff discussed here needs at least 400% magnification to become visible. When it comes to algorithms, it's more nerdy and less photography. But well... in pursuit of 'the best', it can't hurt to focus on the extreme details.

VNG - Variable Number of Gradients - up till now the only algorithm used in DNGMonochrome. It will now be fully implemented (the current version has a few tweaks that will disappear). VNG is strong on diagonals and arcs, doesn't produce excessive noise and holds up reasonably well when sharpened in Lightroom. Weak point: it can produce zipper artifacts on highlighted edges.
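The 'variable number' in VNG refers to how many directions get used per pixel. Around each pixel, gradients are computed in eight directions (N, NE, E, SE, S, SW, W, NW), and only the directions whose gradient falls at or below a threshold T = k1 * min + k2 * (max - min), with the commonly used values k1 = 1.5 and k2 = 0.5, contribute to the estimate. A minimal sketch of that selection step; the plain averaging of per-direction estimates is a simplification (full VNG sums color differences over the chosen directions).

```python
def vng_select(gradients, k1=1.5, k2=0.5):
    """Return the directions whose gradient is at or below the VNG threshold.

    gradients maps a direction name to its (non-negative) gradient magnitude.
    """
    gmin = min(gradients.values())
    gmax = max(gradients.values())
    threshold = k1 * gmin + k2 * (gmax - gmin)
    return [d for d, g in gradients.items() if g <= threshold]


def vng_estimate(gradients, estimates):
    """Average the per-direction estimates of the selected (smooth) directions."""
    chosen = vng_select(gradients)
    return sum(estimates[d] for d in chosen) / len(chosen)


# Large gradients across an edge (E, SE, W) exceed the threshold of 35 and are skipped.
gradients = {"N": 10, "NE": 11, "E": 40, "SE": 50,
             "S": 12, "SW": 13, "W": 45, "NW": 30}
print(vng_select(gradients))  # ['N', 'NE', 'S', 'SW', 'NW']
```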

ACP - Adaptive Color Plane - I like ACP. It's the simplest of the four serious ones I'm implementing. It's fast, it's gritty and it's very strong on horizontal and vertical edges. Weak point: it can mess up diagonals (sawtooth-like artifacts or 'jaggies'). When sharpened it's comparable to VNG, but overall it's less soft than VNG (mind you, at 400% to 800% magnification, else you won't see the difference).
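A minimal sketch of the green step, following the classic Hamilton-Adams formulation of adaptive color plane interpolation (DNGMonochrome's version may differ in details): at a red or blue photosite it compares a horizontal and a vertical gradient, each including a second-order term from the same color two pixels away, and interpolates along the smoother direction. Only the two axes are considered, which is consistent with the weakness on diagonals. The mosaic is assumed to be a list of rows with at least two pixels of margin around (y, x).

```python
def acp_green(m, y, x):
    """Estimate green at a red or blue photosite (y, x) of a Bayer mosaic m."""
    c = m[y][x]
    # Second-order (Laplacian) terms from the same color two photosites away.
    lap_h = 2 * c - m[y][x - 2] - m[y][x + 2]
    lap_v = 2 * c - m[y - 2][x] - m[y + 2][x]
    # Directional gradients: green difference plus the same-color Laplacian.
    grad_h = abs(m[y][x - 1] - m[y][x + 1]) + abs(lap_h)
    grad_v = abs(m[y - 1][x] - m[y + 1][x]) + abs(lap_v)
    # Candidate estimates: mean of the two greens, corrected by the Laplacian.
    green_h = (m[y][x - 1] + m[y][x + 1]) / 2 + lap_h / 4
    green_v = (m[y - 1][x] + m[y + 1][x]) / 2 + lap_v / 4
    if grad_h < grad_v:
        return green_h
    if grad_v < grad_h:
        return green_v
    return (green_h + green_v) / 2


# A sharp vertical edge between columns 2 and 3: ACP interpolates
# vertically, along the edge, and keeps the dark side dark.
edge = [[0, 0, 0, 100, 100] for _ in range(5)]
print(acp_green(edge, 2, 2))  # 0.0
```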

AHD - Adaptive Homogeneity-Directed - AHD is almost perfect. It's strong on every edge, it doesn't produce zippers and it's a little bit 'gritty', which I like. But really, there's no such thing as the perfect algorithm. Weak point: AHD can look quite nasty when you start sharpening it in Lightroom. There are patterns everywhere, a bit maze-like. Balancing the greens doesn't completely solve this; it only makes the patterns less apparent. The patterns give the sharpened photo a glazed-over look, as if the image were behind broken glass. But if you don't intend to sharpen excessively, or if you have a better (different) sharpener than Lightroom (or don't mind tweaking the sharpening with the Masking and Detail sliders), AHD is hard to beat.
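AHD interpolates every pixel twice, once horizontally and once vertically, and then keeps, per pixel, the candidate whose neighborhood is most 'homogeneous'. A minimal sketch of that selection step, simplified on purpose: the published algorithm measures homogeneity in CIELab with separate luminance and color thresholds, while this version compares plain monochrome values against a single hypothetical threshold `eps`. Homogeneity counts the four direct neighbors within `eps`, summed over a 3x3 window; ties are averaged.

```python
def homogeneity(img, y, x, eps):
    """Count the direct neighbors of (y, x) whose value lies within eps."""
    height, width = len(img), len(img[0])
    center = img[y][x]
    count = 0
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ny, nx = y + dy, x + dx
        if 0 <= ny < height and 0 <= nx < width and abs(img[ny][nx] - center) <= eps:
            count += 1
    return count


def ahd_choose(horiz, vert, eps):
    """Per pixel, keep the directional candidate with the higher 3x3 homogeneity."""
    height, width = len(horiz), len(horiz[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            score_h = score_v = 0
            for wy in range(max(0, y - 1), min(height, y + 2)):
                for wx in range(max(0, x - 1), min(width, x + 2)):
                    score_h += homogeneity(horiz, wy, wx, eps)
                    score_v += homogeneity(vert, wy, wx, eps)
            if score_h > score_v:
                row.append(horiz[y][x])
            elif score_v > score_h:
                row.append(vert[y][x])
            else:
                row.append((horiz[y][x] + vert[y][x]) / 2)
        result.append(row)
    return result


# The vertical candidate shows a checkerboard artifact, so every pixel
# is taken from the smooth horizontal candidate.
horiz = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
vert = [[0, 9, 0], [9, 0, 9], [0, 9, 0]]
print(ahd_choose(horiz, vert, eps=1))  # [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
```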

LMMSE - Linear Minimum Mean Square Error - LMMSE is the most complex one (although AHD also has some tricks). It sharpens very nicely and it's the least noisy one. When I read up on it I realized it was partly the implementation I had been searching for. My theoretical assumption was that the color channels should be able to 'help' reconstruct the green channel in a more profound way than the other algorithms did; I was convinced that could be a strategy, but I had no clue how. It's strong in every aspect. However, although you can't argue with the results, it's my least favorite one. Regarding artifacts it's probably the best of the four presented in DNGMonochrome, but it looks very much like a Lightroom photo turned black and white. I think it's just a bit too smooth for my taste: no grittiness, no bite. Maybe the noise gets eradicated too well. Still, given all its strong points, don't let my opinion hold you back. Especially on high ISO photos, LMMSE might be the best suited.

Since LMMSE is complex, I've currently only finished implementing the single plane version, so when you use LMMSE, you can't filter (yet).
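In its directional form, LMMSE makes a horizontal and a vertical estimate and fuses them, weighting each by how reliable it looks locally instead of picking just one. In the published algorithm the fused quantities are color difference signals (green minus red or blue), which is where the color channels 'help' with the green. Assuming the errors of the two estimates are uncorrelated, the minimum mean square error fusion reduces to inverse-variance weighting, sketched below. Estimating the local error variances is the hard part and is left as an input here; `local_variance` is a hypothetical stand-in that just measures the spread of a few nearby samples.

```python
def local_variance(samples):
    """Population variance of a few nearby samples (hypothetical reliability measure)."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


def lmmse_fuse(est_h, est_v, var_h, var_v):
    """Fuse two directional estimates, trusting the one with the smaller error variance more."""
    if var_h + var_v == 0:
        return (est_h + est_v) / 2
    w_h = var_v / (var_h + var_v)  # a small horizontal variance gives a large weight
    return w_h * est_h + (1 - w_h) * est_v


# Horizontal samples agree, vertical ones scatter: the result leans towards est_h.
var_h = local_variance([10, 11, 10, 11])
var_v = local_variance([0, 20, 5, 25])
print(lmmse_fuse(10.5, 12.5, var_h, var_v))
```

Because the result is a weighted average rather than a hard choice, noise in the two estimates partly cancels out.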

For completeness and comparisons I also added bilinear, but I want to urge you not to use that one for anything serious. It's really second rate in all aspects.
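Bilinear interpolation ignores edges entirely: green at a red or blue photosite is simply the mean of its four green neighbors. A minimal sketch of that green step (the mosaic is assumed to be a list of rows with a one-pixel margin) shows why it's second rate: at a sharp edge it averages across the edge and smears it.

```python
def bilinear_green(m, y, x):
    """Green at a red or blue photosite: the plain mean of the four green neighbors."""
    return (m[y - 1][x] + m[y + 1][x] + m[y][x - 1] + m[y][x + 1]) / 4


# A dark area next to a bright column: the bright side leaks into the dark pixel.
edge = [[0, 0, 100],
        [0, 0, 100],
        [0, 0, 100]]
print(bilinear_green(edge, 1, 1))  # 25.0
```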

Measured in single plane mode, the speed order (fastest first) is: ACP, LMMSE, VNG. AHD is left out here, since it can't be used in single plane mode.

Green imbalance

This has been an issue from the start. Most algorithms assume G1 and G2 in the Bayer layout are more or less the same value, or at least hold a correct value. In practice this is not the case. The divergence between G1 and G2 results from influence of the other channels (red or blue). This was very apparent on the Leica M8 and M9, where VNG produces highly visible maze patterns in areas where red is dominant. One of the greens on the CCD of these Leicas is clearly influenced by what's happening in the red channel. The M8 is more sensitive to this problem than the M9, but both are problematic (I recently discovered that the Leica S2 suffers even more from this problem, whereas the S suffers much less).

By now I've improved the algorithm that tackles this problem, but that's not the whole story. The issue is tricky because it depends not only on the camera used, but also on the algorithm used. VNG needs correction on the M8 and the M9, but on the other M models - as far as I can tell - correction is hardly necessary. For AHD it's a different story. When G1 and G2 are too far apart, AHD causes stronger patterns - mostly visible when sharpening - on all the Leica models (probably also on the Canons, but I haven't tested the full set yet). The problem is that it's hard to find the right amount of correction, since it's unclear per sensor under what conditions the greens diverge most, or where in the photo the algorithm suffers most from the divergence (shadows, highlights, mid tones, certain colors etc.).

So the new DNGMonochrome will contain a special module where the correction can be set and changed per camera and per algorithm. I've already set it to some defaults I think are okay, but since my testing is limited to only a few photos per camera, I can't judge every situation in which these problems might show. Of course, this undercuts the whole idea that 50% of the photo keeps the green as recorded: that percentage drops once you start correcting one of the greens. On the other hand, the recorded value wasn't correct to start with. The recorded green wasn't what really hit the sensor at that spot, which makes the whole point slightly moot.
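A minimal sketch of the simplest possible green balancing, to show what 'correcting one of the greens' means: scale every G2 photosite so that the mean of G2 moves toward the mean of G1, with a strength factor standing in for the per camera, per algorithm amount. This is a hypothetical global version; since the divergence varies across shadows, highlights and colors, a practical correction would more likely work on local neighborhoods. An RGGB layout is assumed.

```python
def balance_greens(mosaic, strength=1.0, g1=(0, 1), g2=(1, 0)):
    """Scale G2 photosites toward the G1 level; returns a corrected copy.

    strength: 0 leaves G2 as recorded, 1 matches the G2 mean to the G1 mean.
    g1, g2: (row, col) of the two greens inside each 2x2 quad (RGGB assumed).
    """
    height, width = len(mosaic), len(mosaic[0])
    quads = [(y, x) for y in range(0, height - 1, 2) for x in range(0, width - 1, 2)]
    mean_g1 = sum(mosaic[y + g1[0]][x + g1[1]] for y, x in quads) / len(quads)
    mean_g2 = sum(mosaic[y + g2[0]][x + g2[1]] for y, x in quads) / len(quads)
    result = [list(row) for row in mosaic]
    if mean_g2 == 0:
        return result
    gain = 1 + strength * (mean_g1 / mean_g2 - 1)
    for y, x in quads:
        result[y + g2[0]][x + g2[1]] *= gain
    return result


# G1 = 100, G2 = 80: full strength lifts G2 to 100, half strength to 90.
quad = [[50, 100],
        [80, 30]]
print(balance_greens(quad, 1.0))  # [[50, 100], [100.0, 30]]
print(balance_greens(quad, 0.5))  # [[50, 100], [90.0, 30]]
```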

A genuine idea?

So, I was going back and forth between these algorithms, frustrated that some parts of a photo worked better with algorithm A while the photo as a whole looked better with algorithm B or C, when it suddenly dawned on me: why can't we interpolate photos with more than one algorithm? Why is it a choice made upfront, after which you're stuck with it for the whole photo? Not to mention programs like Lightroom, which don't want to bother you with this stuff at all and don't even give you a choice upfront (or 'at all').

Well... I can think up a few reasons why. Good reasons.

But not good enough reasons to hold back my nerdy brain waves.

So I decided to do it.

Yes.

In the new DNGMonochrome you can interpolate one DNG (the same DNG) with all the provided algorithms.

Different parts can be interpolated differently.

Now, don't tell me that's not a genuine idea!

I will elaborate in a future post.
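As a minimal sketch of the general idea only (not how DNGMonochrome does it), assuming each algorithm has already produced a full-size monochrome result, two results can be combined per pixel through a mask. The circular region with a soft (feathered) edge, and the names `circle_mask` and `blend`, are hypothetical choices for illustration.

```python
import math


def circle_mask(height, width, cy, cx, radius, feather=0.0):
    """Weight 1.0 inside the circle, 0.0 outside, fading linearly over `feather` pixels."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            d = math.hypot(y - cy, x - cx)
            if d <= radius:
                weight = 1.0
            elif feather > 0 and d < radius + feather:
                weight = 1.0 - (d - radius) / feather
            else:
                weight = 0.0
            row.append(weight)
        mask.append(row)
    return mask


def blend(inside, outside, mask):
    """Per pixel: the mask weight of `inside` plus the remainder of `outside`."""
    return [
        [w * a + (1 - w) * b for a, b, w in zip(row_a, row_b, row_w)]
        for row_a, row_b, row_w in zip(inside, outside, mask)
    ]


# e.g. ACP inside the circle for crisp verticals, AHD everywhere else.
acp_result = [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
ahd_result = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
mask = circle_mask(3, 3, cy=1, cx=1, radius=0.5)
print(blend(acp_result, ahd_result, mask))
# [[1.0, 1.0, 1.0], [1.0, 9.0, 1.0], [1.0, 1.0, 1.0]]
```

A feathered edge avoids a visible seam where one algorithm's texture meets the other's.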

When is it finally ready?

Well, I'm currently finishing testing all the algorithms with the different cameras. All the new stuff has to work with every supported DNG. Especially the rotation and the possibility to interpolate different parts of the photo differently can give unexpected results with different cameras. There are still quite a few bugs to kill, so I can't give an exact date. But I hope to release the official 1.0.0 before the end of November (2018). I might release an unstable beta before that. It's not a promise though, so don't hold me to it.

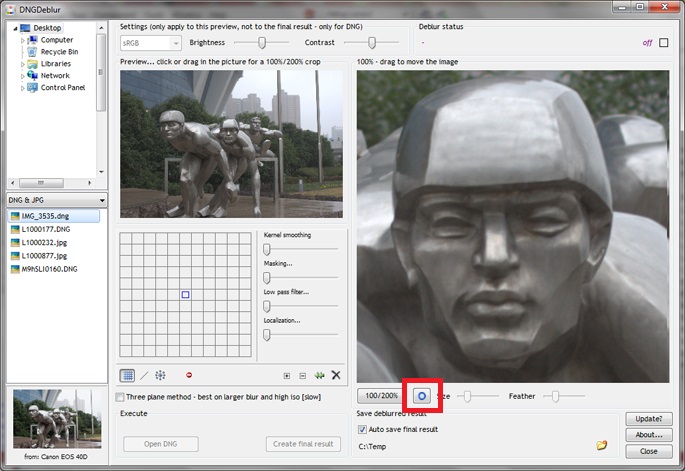

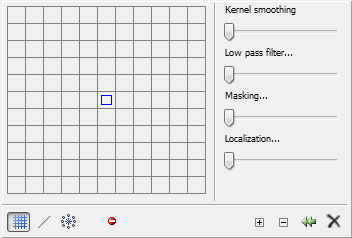

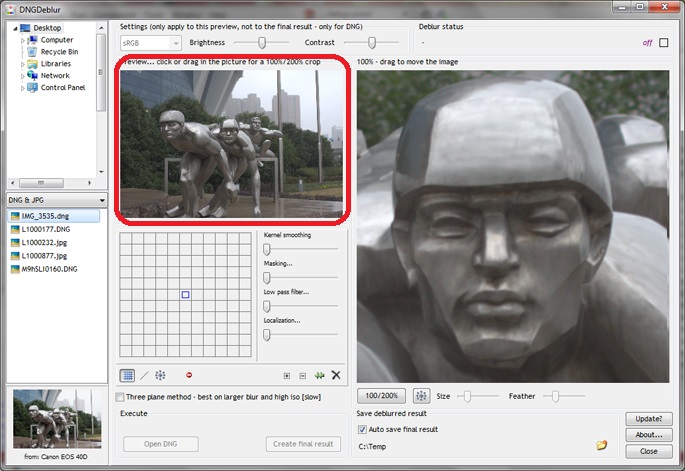

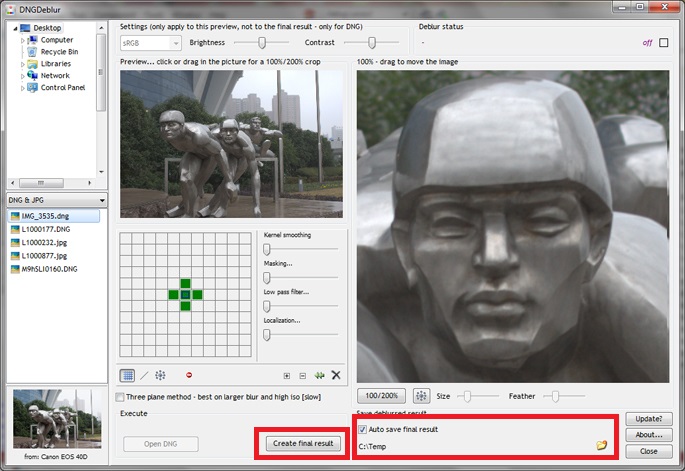

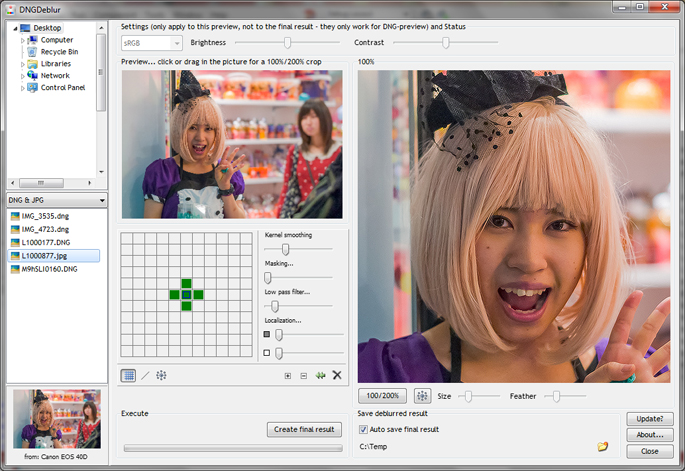

Button to turn on the deblur circle mode...

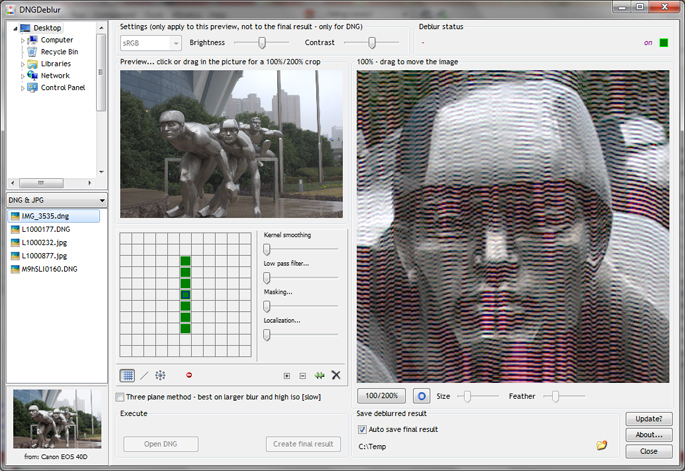

No circles placed, the whole photo is deblurred (ugly deblurring shown deliberately)...

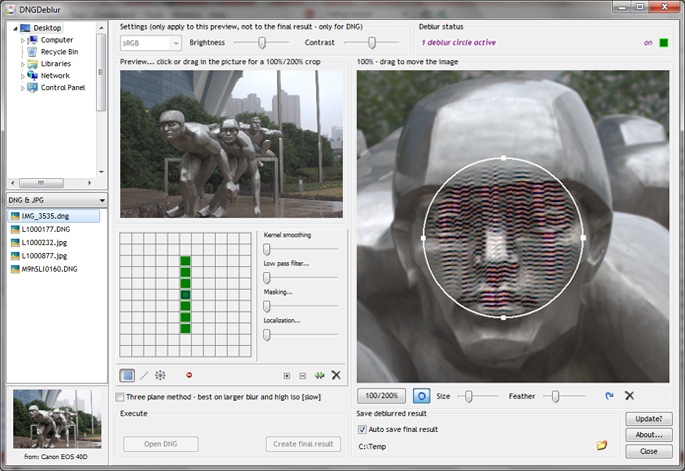

One circle placed, the deblurring is confined (ugly deblurring shown deliberately)...

Option to select the slower three plane method for DNGs...

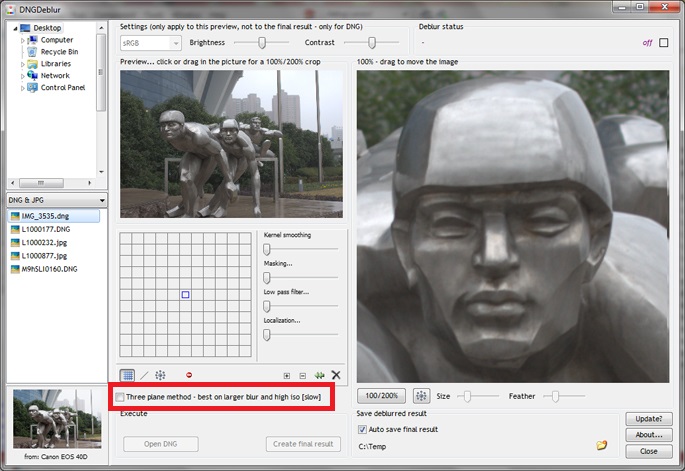


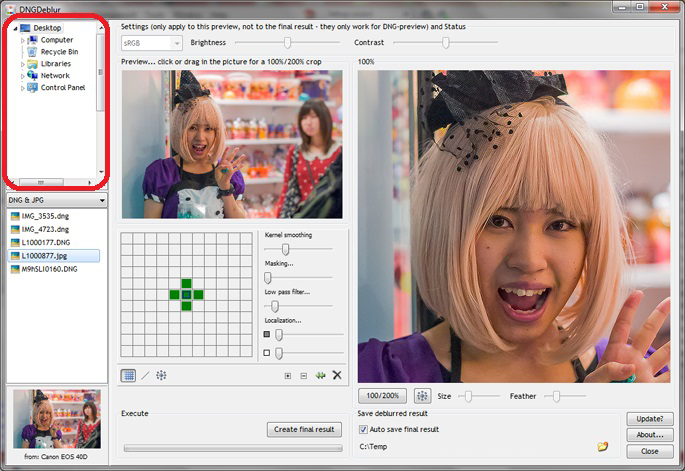

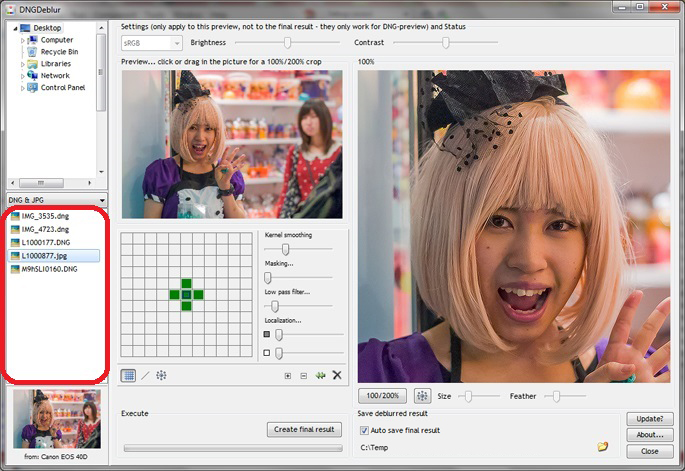

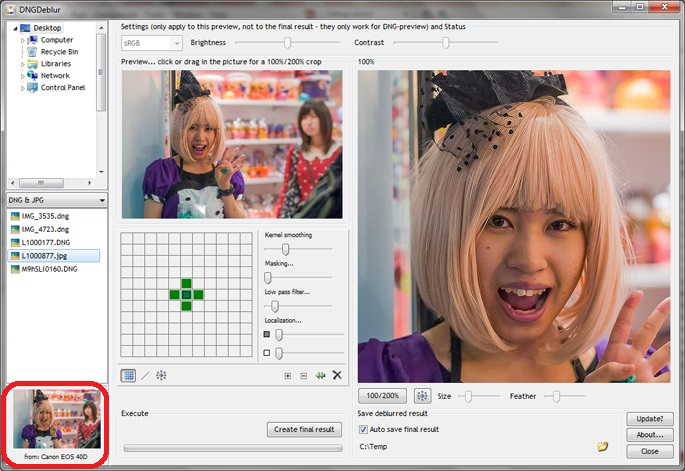

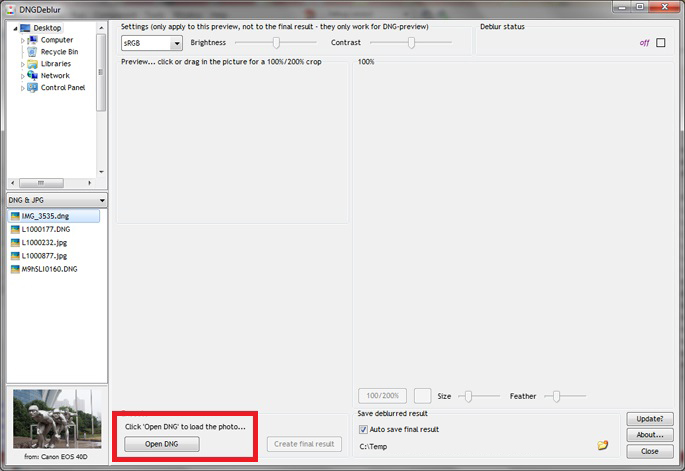

In this list you select the folder where your DNGs or JPEGs reside...

The DNGs and/or JPEGs will then show up in this list...

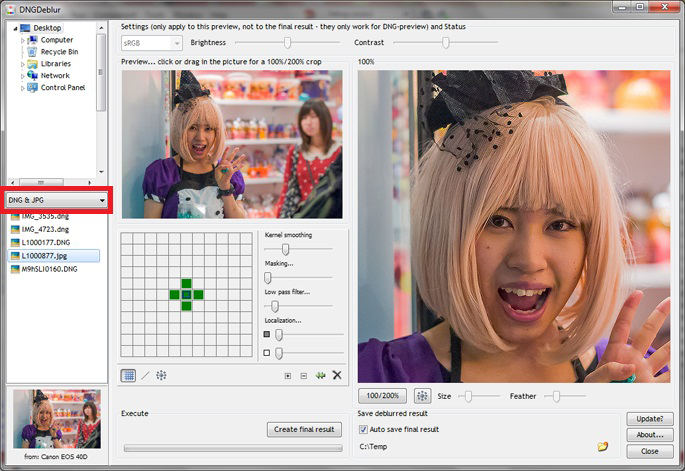

In the middle you can select whether you want to see DNGs and JPEGs, only DNGs or only JPEGs...

When you select a DNG or JPEG, you will see the preview image here, with the camera model the photo was taken with underneath. Note though that for JPEGs you will not see the camera model (yet), but an indication of whether the JPEG is 8 or 24 bits per pixel. This screenshot is from an earlier version and shows one of the many bugs I'm still solving: a JPEG is selected, and this photo was not taken with the Canon 40D...

When you select a DNG, you have to click 'Open DNG' before you can start working on the photo. This button won't show if you select a JPEG.

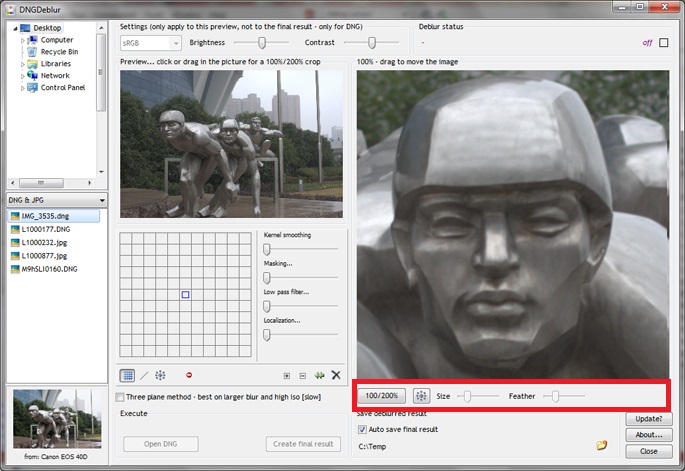

Drag or click in the smaller image to show a 100% view of your selection on the right... You can also drag the big image around with your mouse...

Buttons and sliders under the big image...

Click 'Create final result' to produce the deblurred photo, which is then saved automatically if you have turned on 'Auto save' with a valid folder...


Screenshot of a first version - the official release will look slightly different...
